Welcome to my in-depth guide to technical SEO, a topic that is often overlooked in digital marketing but is crucial to the success of your website. Technical SEO may seem dry at first glance, but trust me, the impact on your search engine visibility is immense. In this article, we'll dive deep into crawlability, indexing, Schema.org and Core Web Vitals and look at how you can optimize these aspects of your website. I'll show you how you can use both Yoast SEO and RankMath to take the technical SEO of your WordPress website to the next level. If you need basic information on search engine optimization, I recommend my comprehensive Yoast SEO guide, which lays the foundation.

Technical SEO 2024: Comprehensive guide to website optimization

Optimize your website for 2024: Crawlability, indexing, Schema.org and Core Web Vitals.

Course Provider: Person

Course Provider Name: Saskia Teichmann

Course Provider URL: https://www.saskialund.de/

Course Mode: Online

Course Workload: PT01H

Course Type: Free

Crawlability: The basis for successful indexing

Crawlability is one of the most important, but often overlooked, aspects of technical SEO. Without a well-structured and crawlable website, search engines cannot properly capture your content, which means your pages may not appear in search results at all. Crawlability refers to the ability of search engine bots to crawl your website and understand which pages should be indexed. Let's dive deep into this topic to make sure your website is perfectly prepared for search engines.

Understanding how search engines crawl

Search engines, especially Google, use so-called "crawlers" or "bots" to search the internet. These bots follow the links on your website to discover and index new content. The crawler usually starts on your homepage and then follows the internal links to other pages. Therefore, clean and well-structured internal linking is crucial for successful crawlability.

Important points:

- Robust internal linking: Make sure that every important page on your website can be reached by at least one internal link. Use a flat page hierarchy so that no important page is more than three clicks away from the homepage. Read more about internal linking in my comprehensive Yoast SEO guide.

- Clean URL structure: Your URLs should be short, descriptive and free of unnecessary parameters. Avoid dynamic URLs, which are often created by session IDs or filter parameters, as these are more difficult for crawlers to understand.
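The three-click rule above can be checked with a small script. The following is a minimal sketch, assuming you have already exported your internal links as a mapping from each page to the pages it links to; the `site` data and the function names are hypothetical and only serve as an illustration.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: fewest clicks needed to reach each page from `start`."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, start, max_clicks=3):
    """Pages that are unreachable or more than `max_clicks` away from `start`."""
    depths = click_depths(links, start)
    pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(p for p in pages if depths.get(p, float("inf")) > max_clicks)


# Hypothetical link graph: page -> pages it links to
site = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/page/3/"],
    "/blog/page/3/": ["/blog/old-post/"],
    "/orphan/": ["/"],
}

print(too_deep(site, "/"))
```

In this example, the old post sits four clicks deep and the orphan page has no incoming link at all, so both are reported.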

Optimizing the Robots.txt file

The Robots.txt file is a small text document that is stored on your server and instructs search engines which parts of your website may and may not be crawled. A correctly configured Robots.txt document can prevent unimportant pages such as admin areas, internal search results or other non-relevant pages from being crawled.

Steps towards optimization:

- Restrict access: Block unimportant or duplicate page content. This could, for example, affect pages with session IDs, internal search pages or duplicate category pages.

- Allow access: Make sure that no important pages are inadvertently blocked. This can easily happen if a general rule is too strict.

- Check the Robots.txt report: Use the Robots.txt report in Google Search Console (it replaced the former Robots.txt tester) to confirm that Google can fetch and parse your file, and use the URL Inspection tool to check that important pages are not blocked.
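You can also test a rule set locally before you upload it. The following is a minimal sketch using Python's standard `urllib.robotparser`; the rules and the example.com URLs are assumptions for illustration. Python's parser applies the first matching rule, whereas Google applies the most specific one, so treat the result as a rough check for simple files only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rule set for a WordPress site
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search/
"""

# Hypothetical URLs you want to verify
URLS = [
    "https://www.example.com/blog/technical-seo/",
    "https://www.example.com/wp-admin/options.php",
    "https://www.example.com/search/seo/",
]


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules forbid the user agent to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


for url in blocked_urls(ROBOTS_TXT, URLS):
    print("blocked:", url)
```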

Reading tip:

Google Search Central: Robots.txt Introduction - An official resource from Google that explains how to correctly set up and optimize your Robots.txt file.

XML sitemaps and their role in crawlability

An XML sitemap is a file that provides search engines with an overview of all indexable pages on your website. These sitemaps help Google and other search engines to discover and crawl your pages faster, especially if your website has many pages or is new.

Best Practices:

- Automatic sitemap creation: Use SEO plugins such as Yoast SEO or RankMath to automatically generate and update your XML sitemap. These plugins ensure that all new pages are automatically added to the sitemap. You can find more tips in my Yoast SEO guide.

- Submit sitemap: Submit your sitemap to Google Search Console to ensure that it is crawled. This is especially important if you make major changes to your website.

- Sitemap structure: Make sure that your sitemap is not overloaded. A single sitemap file may contain at most 50,000 URLs and must not exceed 50 MB uncompressed. If yours does, split it into several sitemaps and reference them from a sitemap index file.
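SEO plugins handle the splitting for you, but the principle is easy to illustrate. The following is a minimal sketch, assuming you have a plain list of URLs; the function names and the example.com addresses are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit of the sitemap protocol


def chunk(urls, size=MAX_URLS):
    """Yield consecutive slices of at most `size` URLs."""
    for i in range(0, len(urls), size):
        yield urls[i:i + size]


def build_sitemap(urls):
    """Serialize one <urlset> file for the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def build_index(sitemap_urls):
    """Serialize a <sitemapindex> that points to the individual sitemap files."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = url
    return ET.tostring(index, encoding="unicode")


# Hypothetical site with 100,001 pages -> three sitemap files
pages = [f"https://www.example.com/page-{n}/" for n in range(100_001)]
parts = list(chunk(pages))
index_xml = build_index(
    f"https://www.example.com/sitemap-{n}.xml" for n in range(1, len(parts) + 1)
)
print(len(parts), "sitemap files")
```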

Reading tip:

Moz: The Importance of XML Sitemaps - A guide to the importance of XML sitemaps and their optimization.

Avoidance of crawling traps

Crawling traps are pages or links on your website that keep crawlers "trapped" for an unnecessarily long time without any relevant content being discovered. These traps can lead to important pages not being crawled because the crawler wastes its time in less relevant places.

Examples and solutions:

- Internal search pages: Keep crawlers away from your internal search results pages by blocking them in the Robots.txt file. Keep in mind that this controls crawling, not indexing.

- Pagination: If your website uses pagination (e.g. in a blog), make sure that it is configured correctly and does not cause endless loops. Read more about this in my Yoast SEO guide.

- Infinite Scroll: If your website uses an infinite scroll function, make sure that all content is also accessible via normal links.
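Many crawl traps come from URL parameters that create endless variants of the same page. The following is a minimal sketch that groups such variants, assuming a list of crawled URLs (for example from a crawler export); the parameter names in `TRAP_PARAMS` are assumptions and need to be adapted to your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed parameter names that only create variants of the same content
TRAP_PARAMS = {"sessionid", "sid", "sort", "filter"}


def strip_trap_params(url):
    """Remove variant-only parameters and the fragment from a URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRAP_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_variants(urls):
    """Map each cleaned URL to its crawled variants, keeping only real duplicates."""
    groups = {}
    for url in urls:
        groups.setdefault(strip_trap_params(url), []).append(url)
    return {base: variants for base, variants in groups.items() if len(variants) > 1}


# Hypothetical crawl export
crawled = [
    "https://www.example.com/shop/?sort=price",
    "https://www.example.com/shop/?sort=name&sid=abc",
    "https://www.example.com/shop/",
    "https://www.example.com/blog/?page=2",
]

for base, variants in group_variants(crawled).items():
    print(base, "has", len(variants), "crawlable variants")
```

Groups with many variants are good candidates for a Robots.txt rule or a canonical tag.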

Reading tip:

Search Engine Journal: Avoiding Crawl Traps - How to recognize and avoid crawling traps.

Efficient use of crawl budget

The crawl budget refers to the number of URLs that a search engine crawler can and wants to crawl on your website within a given period. Websites with many pages or high complexity must take special care not to waste their crawl budget.

Strategies for optimization:

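- Use of noindex: Set the noindex directive for pages that should not appear in the search results. A crawler still has to fetch a page to see the directive, so this saves crawl budget only indirectly; to stop a page from being crawled at all, block it in the Robots.txt file instead.

- Avoidance of duplicate content: Make sure that there is no duplicate content on your website that unnecessarily takes up the crawl budget.

- Prioritization of important pages: Prioritize the most important pages, for example by linking to them prominently and listing them in your sitemap, so that they are crawled and updated more frequently.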

Reading tip:

Ahrefs Blog: Crawl Budget Optimization - A deep insight into the importance and optimization of the crawl budget.

Analyze crawl statistics

An important step towards optimizing crawlability is the regular analysis of your crawl statistics in the Google Search Console. These show you how often your website is crawled, how your server responds to the crawl requests and whether there are any problems with crawling.

Tips for analysis:

- Regular review: Take a look at the crawl statistics at least once a month to recognize changes in crawler activity.

- Identify problems: Watch out for sudden drops in crawl rates or repeated errors that could indicate that certain pages are difficult for the crawler to access.

- Take optimization measures: If problems are identified, adjust your Robots.txt file, sitemap or internal linking accordingly.

Reading tip:

Google Search Console Help: Crawl Stats - Instructions for analyzing crawl statistics in the Google Search Console.

Conclusion on the crawlability of your website

Good crawlability is the key to successful indexing of your website and therefore to visibility in search results. If you implement the measures described here, you will ensure that search engines can capture your content efficiently and completely. Use the tools and functions offered by Yoast SEO, RankMath, other comparable on-page SEO tools and the Google Search Console to continuously monitor and improve the crawlability of your website.

Indexing: How to put your content in the spotlight

Indexing is a crucial step in search engine optimization. While crawlability ensures that search engines can find your pages, indexing makes these pages eligible to appear in the search results. If a page is not indexed, it cannot be shown by search engines, regardless of how good the content is. Let's take a deep dive into indexing and make sure your content gets the attention it deserves.

Understanding the basics of indexing

Indexing is the process by which search engines add the pages found on a website to their index. This index is a huge database that contains all the pages that Google & Co. can display in the search results. Each indexed page is evaluated based on the information stored in the search engine index and displayed for relevant search queries.

Important aspects:

- Googlebot and other search engine crawlers: When the Googlebot searches your website, it decides which pages to include in the index. Factors such as the relevance of the content, the technical structure of the page and the user experience all play a role.

- Non-indexed pages: Pages that are not indexed cannot be found by users via search engines. These pages have no influence on the search engine ranking of your website.

Indexing settings in Yoast SEO and RankMath

Both Yoast SEO and RankMath offer user-friendly ways to control the indexing of your pages. These tools allow you to define exactly which pages, posts, categories and tags should appear in the search results and which should not.

Yoast SEO:

- Step 1: In your WordPress dashboard, go to "SEO" > "Search Appearance" (in Yoast SEO 20 and later, these options are located under "SEO" > "Settings").

- Step 2: Select the "Content types" tab to control the indexing of pages and posts.

- Step 3: Specify whether individual pages or post types should be displayed in the search results and adjust the settings as required.

RankMath:

- Step 1: Navigate to "RankMath" > "Titles & Meta".

- Step 2: Select the appropriate tab for posts, pages or media.

- Step 3: Use the "Index" settings to specify which content should appear in the search results.

Tip: If you want to remove a page or a post from the index, set the "noindex" tag in the settings of the respective plugin.

The meaning of the noindex tag

The noindex tag is a powerful tool in SEO that you can use to exclude certain pages from indexing. This can be useful to avoid duplicate content or to keep irrelevant pages out of the search results.

Application examples:

- Internal search results pages: These pages offer no added value for users searching via Google and should therefore be marked with noindex.

- Duplicate or similar pages: If you have similar content on different pages, use noindex to prevent Google from indexing duplicate content.

- Sensitive pages: Pages such as "Data protection", "General terms and conditions" or "Login" pages should generally not be indexed.

Best Practices:

- Control through the Google Search Console: Check the Google Search Console regularly to see which pages are indexed and whether noindex is used correctly.

- Strategic application: Set noindex deliberately in order to concentrate indexing on the most important pages.
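To verify what a plugin actually outputs, you can inspect the HTML of a page yourself. The following is a minimal sketch using only Python's standard library; it assumes you already have the page's HTML and, optionally, the value of its `X-Robots-Tag` response header. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in {"robots", "googlebot"}:
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def is_noindex(html, x_robots_tag=""):
    """True if the meta tag or the X-Robots-Tag header value contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = {d.strip().lower() for d in x_robots_tag.split(",")}
    return "noindex" in parser.directives or "noindex" in header


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindex(page))
```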

Avoidance of duplicate content

Duplicate content is one of the biggest enemies of clean indexing. Search engines are designed to favor unique and valuable content. If your website contains multiple pages with identical or very similar content, search engines may show a different version than the one you intended, ranking signals can be split across the duplicates, and some of these pages may be left out of the index altogether.

Strategies for avoidance:

- Canonical URLs: Use the canonical tag (rel="canonical") to indicate which version of a page is the primary one. This is particularly useful if similar content is accessible via different URLs.

- Categorization and tagging: Avoid repeating the same content on multiple category or tag pages. Instead, use canonical tags or the noindex directive for these pages.

- Content management: Check your content regularly to ensure that there are no duplicate or redundant pages.
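A quick way to audit canonical tags is to read them from the HTML and compare them with the URL you requested. The following is a minimal sketch with Python's standard library; the example.com URLs and the helper names are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> on a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")


def canonical_of(html):
    """Return the canonical URL declared in the HTML, or None if there is none."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


def points_elsewhere(url, html):
    """True if the page declares a canonical URL other than itself."""
    canonical = canonical_of(html)
    return canonical is not None and canonical.rstrip("/") != url.rstrip("/")


# Hypothetical filtered category page that points to its unfiltered version
html = '<head><link rel="canonical" href="https://www.example.com/shop/"></head>'
print(points_elsewhere("https://www.example.com/shop/?sort=price", html))
```

Pages that point elsewhere are deliberate duplicates; pages without any canonical tag deserve a closer look.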

Reading tip:

Moz: The Definitive Guide to Duplicate Content - A comprehensive guide to help you avoid duplicate content and keep your website clean.

Optimization of high priority pages

Not all pages are equally valuable. Some pages, such as your homepage, important product pages or blog posts that regularly generate a lot of traffic, should be prioritized in your SEO strategy.

Best Practices:

- Manual indexing requests: Use the "URL Inspection" tool in Google Search Console to ensure that your most important pages are indexed correctly. Submit them manually for indexing if they are not yet indexed.

- Content optimization: Ensure that high priority pages are regularly updated and optimized to maintain their relevance.

- Internal linking: Link regularly from new posts or important pages to these prioritized pages to boost their visibility and ranking in search results.

Reading tip:

Search Engine Journal: Prioritizing Content for SEO Success - A guide to prioritizing your content for maximum SEO results.

Google Search Console: Your dashboard for indexing

The Google Search Console is one of the most powerful tools available to you to monitor and optimize the indexing of your website. The URL Inspection tool and the page indexing report give you detailed insights into the indexing status of your pages.

Important functions:

- URL Inspection: Check whether a specific URL is indexed and whether there are any problems with indexing.

- Page indexing report (formerly "Coverage"): See which pages have been indexed and which pages have not been indexed, together with the reason.

- Manual indexing requests: Use the "Request indexing" option in the URL Inspection tool to have changed or new pages indexed quickly.

Reading tip:

Google Search Console Help: Index Coverage Report - A detailed guide to using the coverage report in Google Search Console.

Conclusion on the topic of indexing

Indexing is key to ensuring that your content appears in search results and can be found by users. By strategically managing indexation with tools like Yoast SEO and RankMath, avoiding duplicate content and prioritizing your most important pages, you can ensure that your website performs optimally. Use Google Search Console to regularly monitor the indexing status of your pages and make adjustments where necessary. You can find more detailed information in my Yoast SEO guide.

Schema.org: Structured data for better results

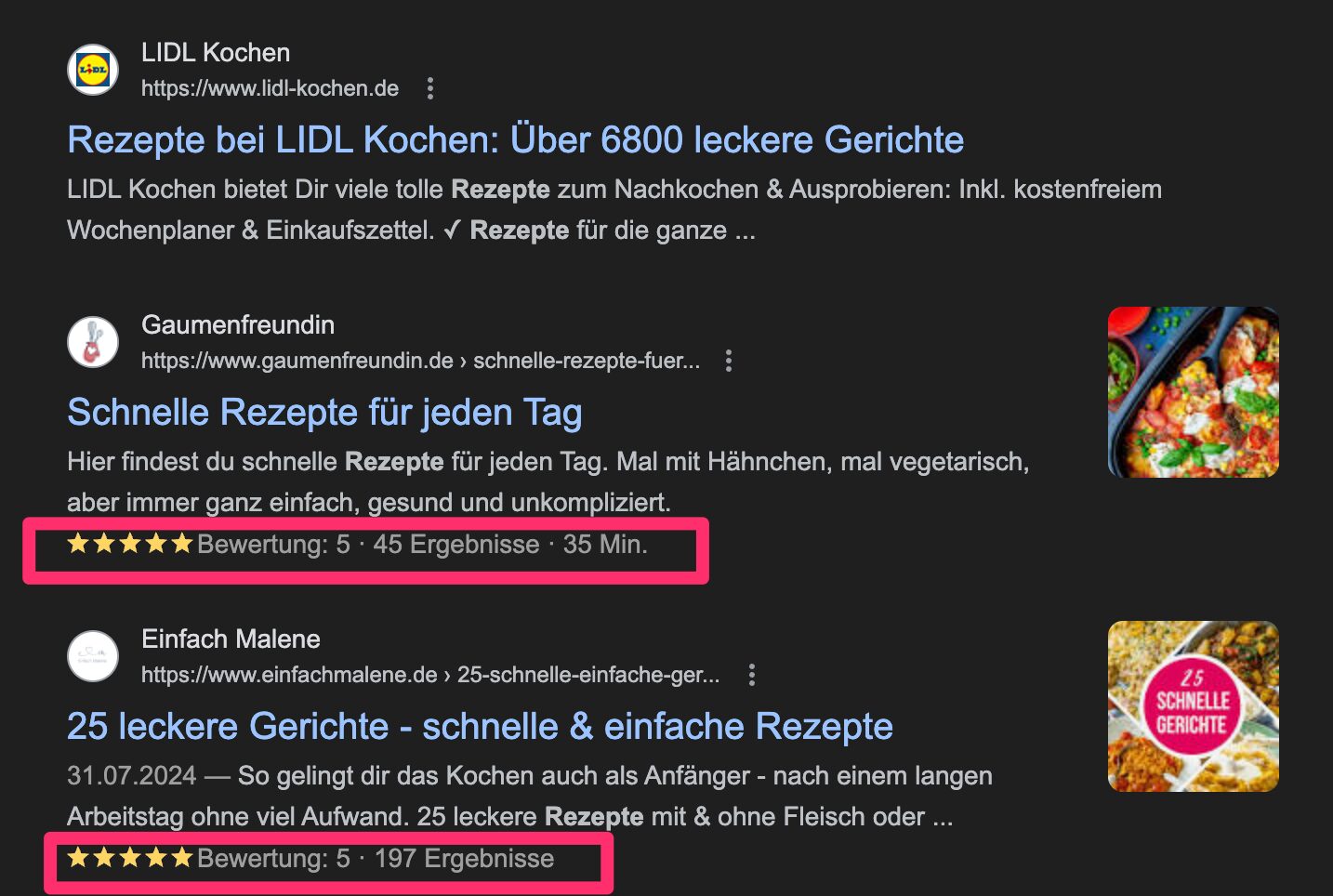

Structured data is a central component of modern search engine optimization. It enables search engines to better understand the content of your website and display it in an appealing form in the search results. By implementing Schema.org markup, you make your pages eligible for rich snippets, which can increase your click-through rate (CTR) and visibility.

What is structured data and why is it important?

Structured data are standardized formats that provide information about a page and the elements it contains. Search engines use this data to better capture the context of content and visually enhance it in the search results. Examples include ratings, prices, FAQs or event data, which can be displayed directly in the search results.

Advantages:

- Improved visibility: Pages with structured data have a higher chance of being displayed as rich snippets, which increases user attention.

- Higher click rate: Rich snippets offer additional added value in the search results and encourage users to click on your result.

- More relevant search results: Structured data helps search engines to better interpret the content of your site and make it more relevant for suitable search queries.

How to insert structured data with Yoast SEO and RankMath

Both WordPress plugins, Yoast SEO and RankMath, offer user-friendly ways to integrate structured data into your website. These tools make it easy to implement schema markup without the need for in-depth technical knowledge.

Yoast SEO:

- Step 1: In the WordPress dashboard, navigate to "SEO" > "Search Appearance" (in Yoast SEO 20 and later, these options are located under "SEO" > "Settings").

- Step 2: Under "General" you can define basic information such as the website type (organization or person) and your company logo.

- Step 3: In the "Content types" tab, you can activate and customize schema markup for specific content types (e.g. posts, pages).

RankMath:

- Step 1: Go to "RankMath" > "Schema".

- Step 2: Choose the right type for your content from a list of ready-made schema types, such as "Article", "Product" or "FAQ".

- Step 3: Customize the schema by filling in specific fields such as author, publication date and other relevant information.

Tip: Use Google's schema markup validation tools to ensure that your structured data is implemented correctly.

Important schema types and their application

There are many different schema types that you can use depending on the content of your website. Here are some of the most important ones:

- Article: Ideal for blog posts, news articles and instructions. This schema highlights the author, the publication date and possibly an image.

- Organization: Describes your company, such as the company name, logo and contact information, so that search engines can show it in the search results.

- Product: Particularly useful for e-commerce sites. It contains information such as price, availability and ratings.

- FAQ (FAQPage): This schema marks up frequently asked questions and answers. Note that since 2023 Google displays FAQ rich results only for a limited set of well-known government and health websites.

- Ratings (Review): Describes user reviews and a review summary, which is particularly valuable for products, services or local businesses.

Reading tip:

Google Developers: Introduction to Structured Data - A detailed introduction to the functionality and implementation of structured data.

Creation of user-defined schemas

While pre-built schema types are useful, there may be situations where you need to create a custom schema. RankMath offers excellent flexibility here to create specific markups that are tailored to your content.

Steps for creating a user-defined schema:

- Step 1: In RankMath, select "Schema" > "Custom Schema".

- Step 2: Define the name and type of schema you want to create.

- Step 3: Add the relevant fields by entering them manually or selecting them from a list of predefined options.

- Step 4: Save and test the custom schema with a rich results test tool.

Tip: If you need more complex custom schemas, you can also use JSON-LD to insert the markup directly into the HTML code of your page.
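As an illustration of hand-written JSON-LD, the following minimal sketch builds an Article markup block with Python's standard `json` module. The properties are taken from the Schema.org Article type; the function name and all example values are placeholders, not the output of either plugin.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def article_jsonld(headline, author, date_published, url):
    """Return a <script> element with Article markup for the <head> of a page."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so that a value can never close the script element early
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return SCRIPT_OPEN + payload + SCRIPT_CLOSE


# Placeholder values for illustration only
snippet = article_jsonld(
    headline="Technical SEO: a practical guide",
    author="Jane Doe",
    date_published="2024-01-15",
    url="https://www.example.com/technical-seo/",
)
print(snippet)
```

Paste the resulting snippet into a rich results test tool before you publish it.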

Reading tip:

Schema.org: Custom Schemas Guide - A guide to creating custom schemas with Schema.org.

Rich snippets and their advantages

Rich snippets are special search results that stand out through structured data. They provide additional context and visual elements that help users make an informed decision before clicking on a search result.

Examples of rich snippets:

- Ratings and stars: Products or services with ratings are visually highlighted in the search results with a star rating.

- Price information: E-commerce sites can display prices directly in the search results, which is particularly useful for price comparisons.

- FAQ snippets: Questions and answers appear directly under the main link, which can significantly increase the click rate.

Advantages:

- Increased click-through rate (CTR): Users are more likely to click on results that are highlighted by rich snippets, as these provide additional information.

- Improved visibility: Rich snippets make your search results more eye-catching and can help you stand out from the competition.

- Better user experience: Users get more information at a glance, which leads to a better user experience.

Reading tip:

Yoast: How to Get Rich Snippets - A comprehensive guide on how to get rich snippets using Schema.org markup.

Testing and validating schema markup

It is not enough to simply implement schema markup on your website. You need to make sure that it works correctly and is recognized by search engines. Google offers tools to test the markup and fix any errors.

Tools for validation:

- Google Rich Results Test: This tool checks whether your structured data has been implemented correctly and is suitable for rich snippets.

- Google Search Console: Use the rich result status reports (listed under "Enhancements") to identify and resolve issues with your structured data.

Steps for validation:

- Step 1: Visit the Google Rich Results Test Tool and enter the URL of the page you want to test.

- Step 2: Analyze the results and correct any errors that are displayed.

- Step 3: Regularly check the Google Search Console for warnings or errors relating to your structured data.

Reading tip:

Schema Markup Validator - The successor to Google's retired Structured Data Testing Tool, for checking and validating any Schema.org markup.

Schema.org: Introduction - An introduction to the world of structured data and how it works.

Structured data according to schema.org is essential

Implementing Schema.org markup on your website is one of the most effective ways to improve how your content appears in search results. By using tools such as Yoast SEO and RankMath, you can insert structured data easily and effectively. Rich snippets generated by structured markup offer you the opportunity to significantly increase your visibility and click-through rate. Regularly review and validate your structured data to ensure it is working correctly and giving you the best results. You can find more detailed information in my Yoast SEO guide.

Core Web Vitals: performance meets user experience

Core Web Vitals are an essential part of technical SEO. They measure the loading speed, interactivity and visual stability of your website. Since Google introduced these metrics as a ranking factor, they have been a key aspect of satisfying not only search engines but also users. A fast, stable and user-friendly website supports better rankings and a better user experience.

What are the Core Web Vitals?

The Core Web Vitals comprise three main metrics that are crucial for evaluating the user experience: LCP, INP and CLS. FID is listed here as well because INP replaced it as a Core Web Vital in March 2024 and many older reports still show it:

- Largest Contentful Paint (LCP): Measures how quickly the main content of a page loads. A good LCP value is 2.5 seconds or less.

- First Input Delay (FID): The former responsiveness metric, which evaluates the delay of the first interaction only. An FID of 100 milliseconds or less is considered good.

- Cumulative Layout Shift (CLS): Measures the visual stability of a page. A good value is 0.1 or less, which means that the layout of the page hardly shifts while it is loading.

- Interaction to Next Paint (INP): The current responsiveness metric, which measures how quickly a page responds to user interactions. A value of 200 milliseconds or less is considered good.
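The thresholds can be turned into a simple rating helper for your own measurements. The following is a minimal sketch; the "good" limits are the ones listed above, while the "poor" limits (4.0 seconds, 500 milliseconds and 0.25) are the boundaries Google publishes in addition. The function name and the sample values are hypothetical.

```python
# metric -> (upper limit for "good", upper limit for "needs improvement")
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless score
}


def rate(metric, value):
    """Classify a measured value as good, needs improvement or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Hypothetical measurements of one page
measured = {"LCP": 2.1, "INP": 350, "CLS": 0.3}
for metric, value in measured.items():
    print(metric, value, rate(metric, value))
```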

Why these metrics are important:

Search engines like Google use these metrics to determine how user-friendly your website is. An optimized website provides a better user experience and has a better chance of ranking well in search results.

Reading tip:

Google PageSpeed Insights - Analyze the performance of your website and receive concrete suggestions for optimization.

Optimization of Largest Contentful Paint (LCP)

The LCP is one of the most important metrics as it measures the loading time of the main content. The faster the main content loads, the better the user experience.

Steps towards optimization:

- Image compression: Use optimized image formats such as WebP and compress images to shorten the loading time.

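- Lazy Loading: Implement lazy loading so that images below the visible area are loaded only when they are needed. Do not lazy-load the main image in the visible area itself, as this would delay the LCP.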

- Improve server response time: Reduce server response time by using a Content Delivery Network (CDN) and fast servers.

- Elimination of render blocking elements: Avoid unnecessary CSS and JavaScript files that could delay the loading of the main content.

Tip: Use tools like Google PageSpeed Insights or Lighthouse to get specific recommendations on how to improve your LCP.

Reading tip:

Google Developers: Optimize LCP - Detailed instructions for optimizing the Largest Contentful Paint.

Improvement of the First Input Delay (FID)

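The FID measures how quickly your website responds to the first user interaction. A fast response time ensures that visitors do not bounce in frustration. Although FID is no longer one of the Core Web Vitals, the following measures remain worthwhile because they improve INP as well.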

Steps towards optimization:

- Minimization of JavaScript: Reduce the number and size of JavaScript files. Unnecessary JavaScript operations should be avoided.

- Asynchronous loading: Load JavaScript files asynchronously so that they do not block the main page.

- Prioritize resources ready for interaction: Ensure that the elements that users interact with load as quickly as possible, e.g. buttons and forms.

Tip: Reduced code complexity and optimized script execution help to improve responsiveness.

Reading tip:

Google Developers: Optimize FID - Tips and strategies for optimizing the first input delay.

Reduction of the Cumulative Layout Shift (CLS)

The CLS measures the visual stability of a page. If content shifts during loading, this can affect the user experience. A stable page structure is therefore crucial.

Steps towards optimization:

- Placeholder for images and videos: Use fixed dimensions for images and videos to avoid layout shifts.

- Avoidance of dynamic content: Make sure that no new content is added during the loading process that could shift the layout.

- Optimization of fonts: Load fonts in such a way that they do not delay or shift the layout. To do this, use the CSS property font-display: swap.
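To get a feeling for the 0.1 limit, it helps to calculate a single layout shift by hand. Google defines the score of one shift as the impact fraction (the share of the viewport affected) multiplied by the distance fraction (how far the content moved relative to the viewport). The following minimal sketch uses hypothetical numbers and ignores how several shifts are grouped into the final CLS value.

```python
def layout_shift_score(impact_fraction, distance_fraction):
    """Score of a single layout shift: impact fraction times distance fraction."""
    return impact_fraction * distance_fraction


# Hypothetical shift: a late-loading banner pushes content that fills half of
# the viewport down by a quarter of the viewport height. Old and new position
# together cover 75% of the viewport; the movement is 25% of its height.
score = layout_shift_score(impact_fraction=0.75, distance_fraction=0.25)
print(round(score, 4))  # 0.1875
print("above the 0.1 limit" if score > 0.1 else "within the 0.1 limit")
```

A single shift of this kind is already enough to push the page out of the "good" range, which is why reserved dimensions for images and banners matter so much.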

Tip: Check your website with tools like Lighthouse to identify and fix specific layout shifts.

Reading tip:

Google Developers: Optimize CLS - Guide to improving the visual stability of your website.

Optimization of the Interaction to Next Paint (INP)

INP is a relatively new metric that measures the responsiveness of a page to user interactions throughout its lifetime. Unlike FID, which only evaluates the first interaction, INP observes every click, tap and keyboard input during the visit and reports one of the slowest response times.

Steps towards optimization:

- Reduce JavaScript: Minimize the JavaScript that is executed when clicking, tapping or typing to avoid delays. Scrolling is not counted as an interaction for INP.

- Prioritize interaction-intensive elements: Ensure that interactive elements such as buttons and menus respond as quickly as possible.

- Optimization of the main thread: Keep the main thread free of long tasks that could impair responsiveness.
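How a single INP value results from many interactions can be approximated in a few lines. The following minimal sketch follows Google's description that INP is the slowest interaction of a visit, with one of the highest values ignored for every 50 interactions; the function name and the latency values are hypothetical, and real measurements come from field data.

```python
def estimate_inp(latencies_ms):
    """Approximate INP: worst latency, skipping one outlier per 50 interactions."""
    if not latencies_ms:
        return None  # no interactions, no INP value
    ranked = sorted(latencies_ms, reverse=True)
    skip = len(ranked) // 50
    return ranked[skip]


# Hypothetical visits: a short one and a long one with a single outlier
short_visit = [40, 80, 320]
long_visit = [900, 250] + [50] * 58  # 60 interactions in total

print(estimate_inp(short_visit))
print(estimate_inp(long_visit))
```

On the short visit the slowest interaction counts in full, while on the long visit the single 900 millisecond outlier is ignored.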

Tip: Use Lighthouse or Chrome DevTools to monitor and optimize the INP values of your site.

Reading tip:

Google Developers: Optimize INP - A guide to optimizing interaction times on your website.

Tools for checking and optimizing the Core Web Vitals

The optimization of Core Web Vitals requires continuous monitoring and adjustments. Use specialized tools that provide you with detailed insights and recommendations for action.

Recommended tools:

- Google PageSpeed Insights: Provides a comprehensive overview of the Core Web Vitals of your website and gives targeted optimization tips.

- Lighthouse: An open source tool from Google that is integrated into Chrome. It analyzes your website in a lab environment, provides detailed reports on LCP and CLS and uses Total Blocking Time as a stand-in for responsiveness.

- Google Search Console: The "Core Web Vitals" report in the Search Console shows how well your pages are performing in the Core Web Vitals and highlights any problems.

Tip: Combine these tools to obtain a comprehensive analysis and carry out targeted optimizations.

Reading tip:

Ahrefs Blog: Comprehensive Guide to Core Web Vitals - A comprehensive guide to optimizing core web vitals.

The role of hosting and server optimization

One of the often overlooked factors in optimizing Core Web Vitals is the quality of the hosting and the configuration of the server. A fast server and an optimized server configuration can significantly reduce loading times.

Best Practices:

- Choosing the right hosting: Use high-performance hosting that is optimized for speed and stability.

- Server caching: Implement server caching to shorten loading times. Tools such as Varnish Cache can help with this.

- Use HTTP/2 and HTTP/3: Make sure your server supports HTTP/2 or HTTP/3 to improve loading speed and increase efficiency.

Tip: Make sure that your hosting provider offers regular updates and security patches to ensure the performance and security of your website.

Reading tip:

Search Engine Journal: Server Optimization for Core Web Vitals - A guide to optimizing your server for better web vitals.

How to specifically improve Core Web Vitals and optimize your website in the long term

Core Web Vitals play a central role in evaluating the user experience on your website. Continuous optimization of these metrics not only ensures better search engine rankings, but also higher visitor satisfaction. By focusing on improving loading speed, interactivity and visual stability, you create a solid foundation for the success of your website.

Use the strategies and tools presented here to take targeted measures to optimize your Core Web Vitals. Remember that a technically flawless and user-friendly website not only meets current requirements, but can also better cope with future developments in digital marketing.

Don't miss the opportunity to take your website to the next level, both in the eyes of users and search engines. You can find more detailed information and specific instructions in my Yoast SEO guide, which helps you to strengthen your overall SEO strategy.

Technical SEO checklist for 2024: step-by-step guide

I have put together a detailed technical SEO checklist for you to ensure that your website is technically optimized and meets the latest SEO standards. This guide will help you to check and optimize all important aspects of technical SEO. Each step is designed to make your website accessible and performant for search engines and users alike:

Tip 1: Update your sitemap regularly

- Step 1: Open your WordPress dashboard and navigate to the settings of the SEO plugin, either Yoast SEO or RankMath.

- Step 2: Make sure that the XML sitemap is activated and that all relevant pages, posts, categories and tags are covered.

- Step 3: Check whether the sitemap has been submitted correctly in the Google Search Console. You can check this under "Indexing" > "Sitemaps" in the Search Console.

- Step 4: Update the sitemap manually if you have made major changes to your website, such as adding or deleting pages.

Tip: Don't forget to submit the sitemap to other search engines such as Bing and Yandex to maximize indexing.

Tip 2: Check Robots.txt

- Step 1: In the WordPress dashboard, go to "SEO" > "Tools" (Yoast SEO) or "RankMath" > "General settings" > "Edit Robots.txt".

- Step 2: Check which areas of your website are blocked or released for search engines.

- Step 3: Make sure that no important pages, such as your main categories or landing pages, are inadvertently blocked.

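- Step 4: Check your Robots.txt file with the Robots.txt report in the Google Search Console (the former Robots.txt tester has been retired) to make sure it doesn't contain any errors.

Note: Make sure that sensitive areas of your website, such as the admin directory or certain private content, are excluded from crawling. Robots.txt is not access protection, so truly private content should also be secured with a login.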

Tip 3: Monitor indexing status

- Step 1: Log in to the Google Search Console and go to the "Pages" report under "Indexing" (formerly the "Coverage" report under "Index").

- Step 2: Check which pages have been indexed and which errors or warnings have occurred.

- Step 3: Correct errors such as "Page not found" (404) or "Page blocked by Robots.txt" to ensure that important content is indexed.

- Step 4: Submit individual pages manually for indexing if you have made major changes and want to ensure that they are recognized quickly.

Tip: Use the "URL Inspection" tool in the Google Search Console to check the current indexing status of a specific page.

Tip 4: Use structured data

- Step 1: Navigate to the SEO plugin settings (Yoast SEO or RankMath) and open the section for structured data or schema markup.

- Step 2: Select the appropriate schema for your content, e.g. "Article", "HowTo", "FAQ" or "Product".

- Step 3: Fill in the relevant fields, such as title, description, author, publication date and any additional information (e.g. rating, price).

- Step 4: Test your structured data with the Google Rich Results Test Tool to ensure that it is correctly implemented and suitable for rich snippets.

Note: Structured data can significantly improve the presentation of your website in the search results and increase the click-through rate.

Tip 5: Optimize Core Web Vitals

- Step 1: Visit Google PageSpeed Insights and enter the URL of your website to check the Core Web Vitals.

- Step 2: Analyze the three main metrics: Largest Contentful Paint (LCP), Interaction to Next Paint (INP) and Cumulative Layout Shift (CLS).

- Step 3: Reduce the loading time of your website by taking measures such as compressing images, minifying CSS and JavaScript and using a content delivery network (CDN).

- Step 4: Optimize the interactivity of your website by reducing unnecessary JavaScript loads and ensuring that important resources are loaded with priority.

- Step 5: Minimize layout shifts by defining fixed sizes for images and videos and loading fonts efficiently.

Tip: Use the Lighthouse browser tool for more detailed reports and specific optimization suggestions.

Your key to a successful SEO strategy: Mastering technical optimizations

Technical SEO may seem complex, but it is the foundation of any successful SEO strategy. By optimizing your website's crawlability, indexing, Schema.org and Core Web Vitals, you lay the foundation for better visibility and higher search engine rankings. Use the powerful tools offered by Yoast SEO and RankMath to master these technical aspects and strengthen your website in the long term.

By continuously making these optimizations, you not only ensure immediate improvements, but also prepare your website for future challenges. If you want to dive deeper into the technical basics, take a look at my detailed Yoast SEO guide: your next step to long-term SEO success.

Further reading tips:

- Google Search Central: Crawling and Indexing

- Moz: The Beginner's Guide to SEO

- Schema.org: Introduction

- Google PageSpeed Insights

- Search Engine Journal: Technical SEO Guide

Would you like to delve deeper into a particular topic or do you have specific questions? Use the comment function, I look forward to your thoughts and questions!
